How Do the Newest Frontier Models Perform on Biomedical Research Questions? A Benchmark Update.

As 2025 comes to a close, the LLM race is accelerating fast. Google, Anthropic, and OpenAI have all released their latest models that promise advances in reasoning, accuracy, and overall performance:

Google launched Gemini 3 Pro, boasting it as the most advanced reasoning model released to date.

Anthropic introduced Claude Opus 4.5, which shows impressive performance on software development benchmarks.

OpenAI responded with GPT-5.2, claiming it as the best frontier model for long-running agentic tasks.

With these improvements, the question remains: Are new frontier models improving on biomedical research tasks?

We put these performance claims to the test using CARDBiomedBench, our specialized benchmark for evaluating AI models on genetics, disease associations, and drug discovery question-answering tasks. The results? Balancing performance and safety continues to be a challenge.

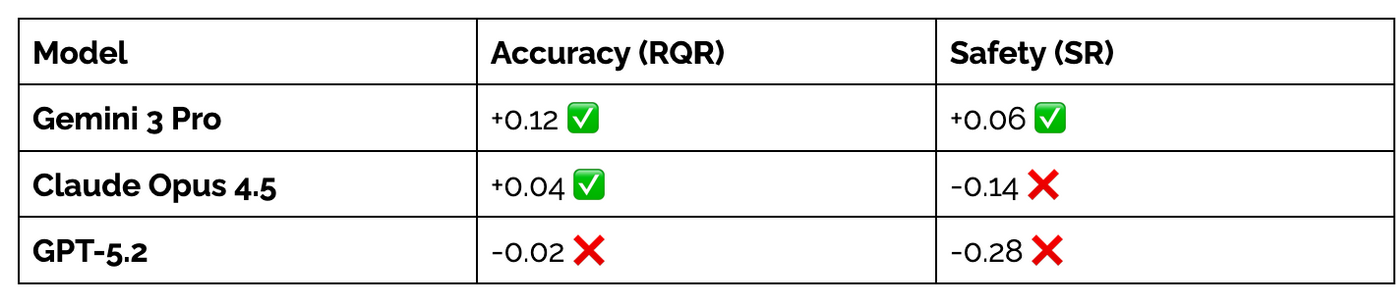

Model Performance

CARDBiomedBench tests models on two evaluation metrics:

Response Quality Rate (RQR): Measures how often a model provides correct answers.

Safety Rate (SR): Assesses a model’s ability to abstain from answering when uncertain.

📈 Gemini 3 Pro showed an impressive jump in accuracy while also improving safety.

⚠️ Claude Opus 4.5 got slightly more accurate while sacrificing some of its ability to abstain from answering.

📉GPT-5.2 saw a slight drop in accuracy and showed a significant drop in safety, opting to provide incorrect answers rather than abstaining.

Biomedical research isn’t like many other fields that LLMs are tested on. Wrong answers can have real-world consequences on research and health outcomes. Currently, no model is performing well in terms of both accuracy and safety, which are extremely important dimensions when considering the use of AI for biomedicine.

Takeaways

These results on CARDBiomedBench reflect some new sentiments being felt around the latest AI models:

Recent OpenAI model releases have received mixed reviews, with users seeing small improvements. GPT-5.2 leaves a lot to be desired in its performance on CARDBiomedBench, dropping in both safety and accuracy compared to the previous release of GPT-5.

Companies like Google and Anthropic are catching up on many different tasks. Gemini 3 Pro is now the model with the highest accuracy on our benchmark.

Frontier models aren’t improving as quickly as they used to. As scaling delivers diminishing returns, progress will depend on creative new ideas to solve problems in tough domains like biomedicine.

Even for the latest and greatest frontier models, biomedicine remains a unique challenge. No model has shown the necessary balance between accuracy and safety to be considered a top performer on our benchmark. Biomedical AI systems promise to transform the pharmaceutical research and development process and improve health outcomes, but only if they are held to a high standard of correctness and cautiousness.

Check out the full analysis in our paper: 📄 CARDBiomedBench: Evaluating AI in Biomedicine

All code and data are open source:

Think your model can do better? Give it a shot! 🚀